AI Application Protection helps your organization secure its AI-powered systems against emerging threats that target large language models (LLMs), generative AI applications, and APIs containing AI-native information. As you embed AI more deeply into your workflows, these models can become targets for malicious users who attempt to manipulate prompts, extract sensitive data, or influence model performance.

The AI Application Protection Policies page enables you to define and manage policies that protect your AI assets and services from malicious interactions. These policies enable you to detect and monitor threats specific to AI applications, such as prompt injection, model manipulation, or data exfiltration attempts.

AI Application Protection policies help ensure that your AI assets, such as models and integrated LLMs, operate securely within your applications by automatically identifying high-risk behavior and enforcing custom protection rules.


AI Application Protection Policies

What will you learn in this topic?

By the end of this topic, you will understand:

The concept of AI Application Protection policies and how they help.

The way to access the AI Application Protection policies page.

The default threat types provided by Traceable and how to manage them.

The steps to add a custom AI Application Protection policy.

Accessing AI Application Protection policies

You can access the AI Application Protection policies page by navigating to Protection → Settings → Policies → AI Application Protection tab. From this page, you can view all existing AI protection rules, manage them, and create new ones.

Understanding AI threat types

By default, Traceable provides multiple AI threat types that you can use to categorize and respond to different risks in AI systems. The following are some threat types:

| Threat Types | Description |
|---|---|
| Prompt Text Evasion and Misdirection | Prompt Text Evasion and Misdirection refers to attempts to bypass safety and moderation filters by altering or disguising input prompts. Attackers may obfuscate, rephrase, or redirect the prompt to avoid detection. These techniques include encoding sensitive content in other languages, using homoglyphs or slang, and inserting invisible or non-standard characters. They may also rely on ambiguous or misleading phrasing to confuse the model and cause it to generate restricted or harmful output. |
| Prompt Injection | Detects and mitigates attempts to manipulate AI models through input prompts. Attackers may try to override your system instructions, extract confidential information, or make the model perform unintended actions. |
| PII Detected in Prompt | Identifies when an input contains personal information, such as names, email addresses, phone numbers, or credentials. This rule prevents this data from being unintentionally sent to AI models, reducing the risk of sensitive data exposure and privacy violations. |
| Model Governance | Monitors and enforces compliance policies around model usage, configuration, and version control. This rule helps detect unauthorized model updates, unapproved usage, and deviation from compliance standards. This ensures that your AI systems remain compliant with your organizational requirements. |
| Code Detected in Prompt | Code Detected in Prompt refers to prompts that contain or attempt to elicit code-related content. These prompts may be used to trick the model into generating functional exploit code. |
| AI Rate Limiting | This rule restricts excessive or abnormal usage of AI tools within a defined time window. It prevents resource exhaustion, abuse, or DoS scenarios that could degrade model performance and availability. This ensures the stable and predictable performance of your AI tools under different scenarios. |
| AI Input Explosion | AI Input Explosion occurs when prompts are intentionally structured to expand recursively or generate excessive tokens. |

Each threat type contains a set of rules that you can manage according to your requirements and align with your AI security posture.

While Traceable provides predefined rules, you can also define custom rules according to your specific requirements. For more information, see Creating custom AI Application Protection rules.
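To make the Prompt Text Evasion and Misdirection description in the table above more concrete, the following Python sketch flags two of the techniques it mentions: invisible characters and non-standard character forms. It is an illustration only and not Traceable's detection logic. The function names `suspicious_characters` and `is_disguised` are hypothetical, and the checks are deliberately narrow (NFKC normalization does not catch cross-script homoglyphs and can also change legitimate text).

```python
import unicodedata


def suspicious_characters(prompt: str) -> list[str]:
    """List the Unicode names of invisible format characters in the prompt."""
    return [
        unicodedata.name(ch, f"U+{ord(ch):04X}")
        for ch in prompt
        if unicodedata.category(ch) == "Cf"  # e.g. zero-width space or joiner
    ]


def is_disguised(prompt: str) -> bool:
    """Hypothetical check: True if the prompt uses invisible or non-standard forms."""
    # NFKC folds compatibility forms such as fullwidth letters to plain ones,
    # so a change after normalization hints at non-standard characters.
    normalized = unicodedata.normalize("NFKC", prompt)
    return bool(suspicious_characters(prompt)) or normalized != prompt
```

For example, a prompt containing a zero-width space between two letters would be reported by `suspicious_characters`, while ordinary ASCII text passes both checks.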

Key features

The following features are available in the AI Application Protection policies tab:

| Features | Description |
|---|---|
| Threat Type/Threat Rule List | The list of pre-defined and custom AI Application Protection rules categorized by Threat Types by default, such as Prompt Injection. For information on custom rules, see Creating custom AI Application Protection rules. |
| Threat Type/Threat Rule Information | Displays the following details for each threat type and rule: |
| Severity Levels | Displays the severity assigned to the issues detected using the rule, indicating its impact on your application. |
| Actions | Displays the action Traceable should take against the threat detected by the rule. The following actions are available for each rule: While you can configure the above actions for each threat rule, each threat type displays the count of threat rules categorized by the action. For more information, see Policy Management. |
| Threat Type Status | Displays the current status of a threat type, Enabled or Disabled. |
| Filtering and Grouping | Filter and/or group rules using the Filter ( |

Creating custom AI Application Protection rules

Creating an AI Application Protection rule enables you to define how Traceable should identify and respond to specific AI-related threats within your application. Each rule specifies a threat type, detection criteria, and the corresponding action to take when Traceable identifies that threat. By creating these rules, you can protect your AI assets according to your requirements, ensuring that LLMs and AI endpoints are monitored and safeguarded against targeted attacks and misuse.

To create a custom AI Application Protection rule, complete the following steps:

Step 1 — Specify the Rule Details

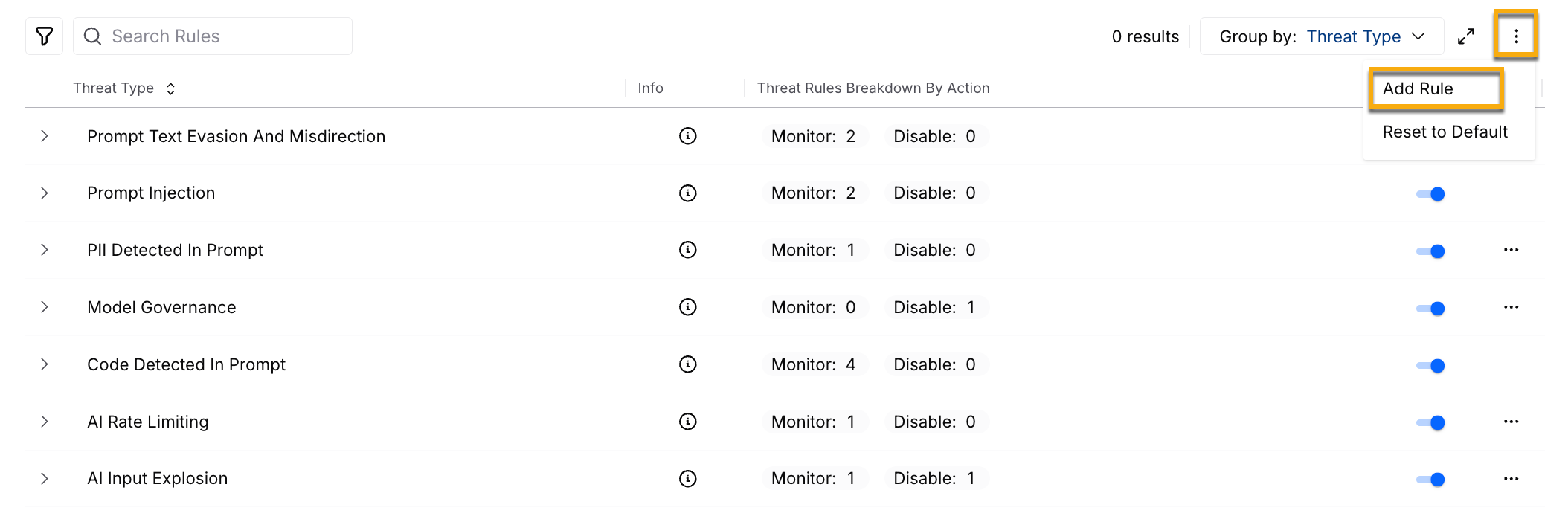

Click the Ellipsis icon in the top right corner of the threat type list, and then click Add Rule.

In the Add Rule pop-up dialog, select the Threat Type from the list of available AI threat categories.

In the Add Rule: <Threat Type> page, do the following:

Specify the Rule Name.

(Optional) Specify the rule Description.

Based on the threat type you selected above, complete Step 2 below.

Step 2 — Specify Rule Criteria

The following tabs highlight the steps to specify the rule criteria. Based on the Threat Type you selected while creating the rule, complete the following steps:

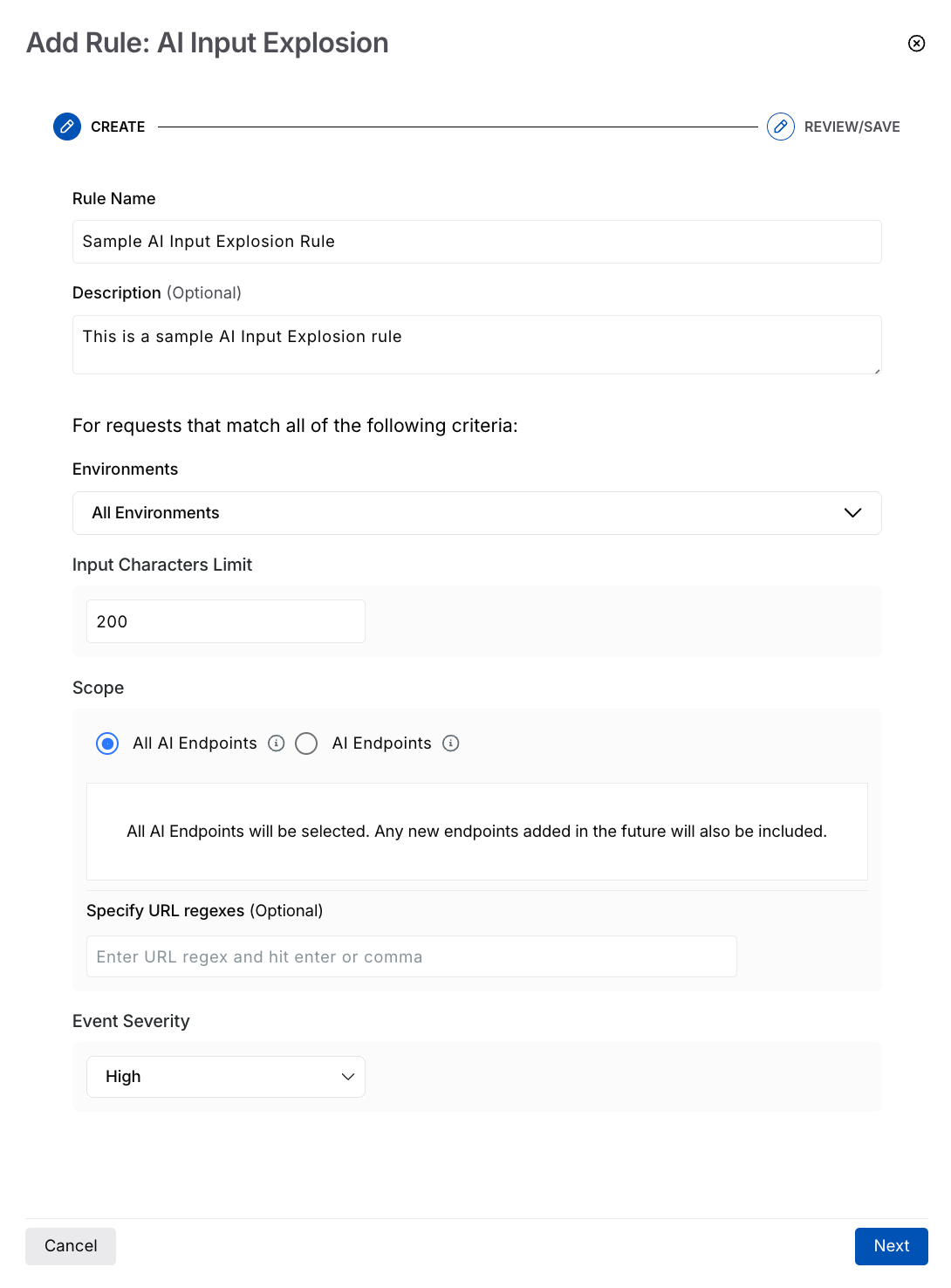

AI Input Explosion

Select the Environment(s) where you wish the rule to apply.

Specify the Input Characters Limit after which Traceable should consider the actor as a threat.

Select the Scope (All or specific AI endpoints) where you wish to apply the rule.

(Optional) Specify URL Regexes if you wish to include specific API endpoints.

Select the Event Severity you wish to apply to the identified event.

Click Create.
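As an illustration of the AI Input Explosion criteria above, the following minimal Python sketch shows how a character limit combined with optional URL regexes could be evaluated. It is not Traceable's implementation. The function name `exceeds_input_limit`, its parameters, and the example URL patterns are hypothetical.

```python
import re
from typing import Iterable, Optional


def exceeds_input_limit(
    prompt: str,
    url: str,
    input_characters_limit: int,
    url_regexes: Optional[Iterable[str]] = None,
) -> bool:
    """Return True when the prompt is longer than the limit on an in-scope URL.

    Hypothetical helper: names and semantics are assumptions for illustration.
    """
    # If URL regexes are given, the rule only applies to matching endpoints.
    patterns = list(url_regexes or [])
    if patterns and not any(re.search(p, url) for p in patterns):
        return False
    # Flag prompts whose character count goes past the configured limit.
    return len(prompt) > input_characters_limit
```

With a limit of 10 characters and the pattern `^/v1/chat`, an 11-character prompt sent to `/v1/chat/completions` would be flagged, while the same prompt sent to `/v1/other` would not.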

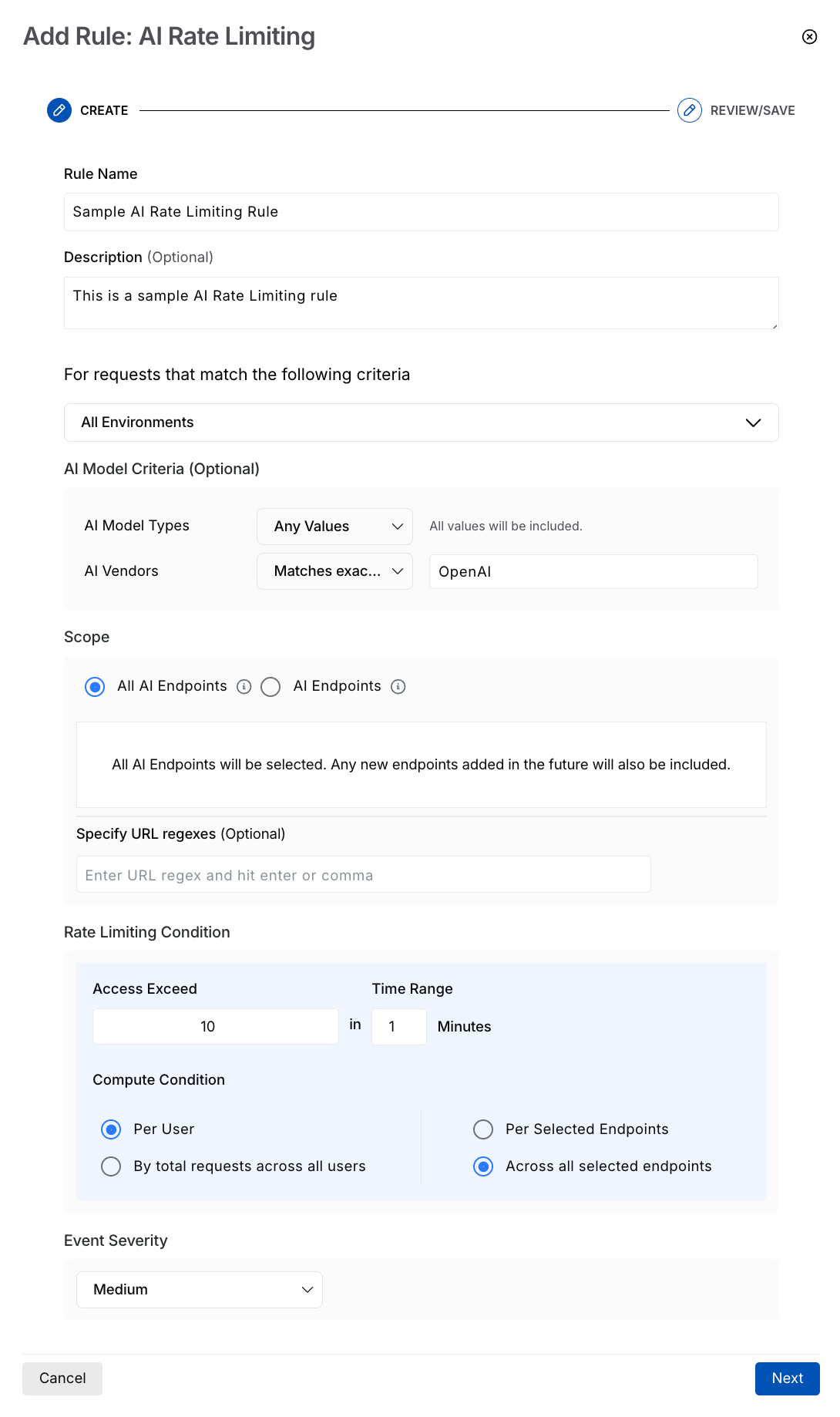

AI Rate Limiting Rule

Select the Environment(s) where you wish the rule to apply.

(Optional) Select the AI Model Criteria based on which Traceable should apply the rule:

AI Model Types — The AI models based on their purpose or functionality.

AI Vendors — The provider or platform behind each AI model or service.

Traceable provides the following operators that you can select from, for the above criteria:

Any values — Includes all available values in the rule.

Matches exactly — Includes the value(s) you specify.

Does not match exactly — Includes all value(s) except the ones you specify.

Contains string — Includes the value(s) that contain the string you specify.

Does not contain string — Includes all value(s) except the ones containing the string you specify.

Matches pattern — Includes all value(s) matching the pattern you specify.

Note

Traceable performs an AND between the criteria above.

The values you specify for the above criteria are case-sensitive.

Select the Scope (All or specific AI endpoints) where you wish to apply the rule.

(Optional) Specify URL Regexes if you wish to include specific API endpoints.

Specify the Rate Limiting Condition based on which Traceable should limit the API requests. This condition consists of the following parameters:

Access Exceed — The number of API requests after which Traceable should limit the user. For example, 50 requests.

Time Range — The duration for which the limit should apply. For example, a 5-minute time range means the API(s) can receive up to 50 requests within this 5-minute window. Once this limit is reached, Traceable limits the user.

Compute Condition — A combination of conditions on which the above limits should apply:

Per user and Per selected endpoints — If a user exceeds the above-set limit for an API endpoint, Traceable implements the action you select for the threat type.

Per user and Across all selected endpoints — If a user exceeds the above-set limit across all selected API endpoints, Traceable implements the action you select for the threat type.

By total requests across all users, and Per selected endpoints — If all users collectively exceed the above set limit for an API endpoint, Traceable implements the action you select for the threat type, for all users. For example, you configure a rule to limit requests to 100 in 1 minute for an API. Then, if there are five users, and each user sends 20 or more requests per minute to that API, Traceable implements the action you select for the threat type.

By total requests across all users, and Across all selected endpoints — If all users collectively exceed the above set limit across all selected API endpoints, Traceable implements the action you select for the threat type, for all users.

Select the Event Severity you wish to apply to the identified event.

Click Next.

Review the details you specified above, and click Submit.
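The Rate Limiting Condition in this procedure can be pictured with a small Python sketch. It assumes a fixed time window, treats the four Compute Condition options as different ways of grouping request counts, and reports a violation on the first request past the Access Exceed value. The names `Compute`, `RateLimitRule`, and `record` are hypothetical, and Traceable's actual windowing and counting behavior may differ.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from enum import Enum


class Compute(Enum):
    PER_USER_PER_ENDPOINT = "per user, per selected endpoint"
    PER_USER_ALL_ENDPOINTS = "per user, across all selected endpoints"
    ALL_USERS_PER_ENDPOINT = "all users, per selected endpoint"
    ALL_USERS_ALL_ENDPOINTS = "all users, across all selected endpoints"


@dataclass
class RateLimitRule:
    access_exceed: int        # e.g. 50 requests
    time_range_seconds: int   # e.g. 300 for a 5-minute window
    compute: Compute
    _counts: dict = field(default_factory=lambda: defaultdict(int))

    def _key(self, user: str, endpoint: str, window: int) -> tuple:
        # Pick which dimensions share a counter for the chosen condition.
        per_user = self.compute in (
            Compute.PER_USER_PER_ENDPOINT, Compute.PER_USER_ALL_ENDPOINTS)
        per_endpoint = self.compute in (
            Compute.PER_USER_PER_ENDPOINT, Compute.ALL_USERS_PER_ENDPOINT)
        return (window, user if per_user else "*", endpoint if per_endpoint else "*")

    def record(self, user: str, endpoint: str, timestamp: float) -> bool:
        """Count one request; return True once the counter goes past the limit."""
        window = int(timestamp // self.time_range_seconds)  # fixed-window bucket
        key = self._key(user, endpoint, window)
        self._counts[key] += 1
        return self._counts[key] > self.access_exceed
```

In this sketch, a rule with `Compute.ALL_USERS_PER_ENDPOINT` shares one counter per endpoint among all users, whereas `Compute.PER_USER_PER_ENDPOINT` keeps a separate counter for each user and endpoint pair.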

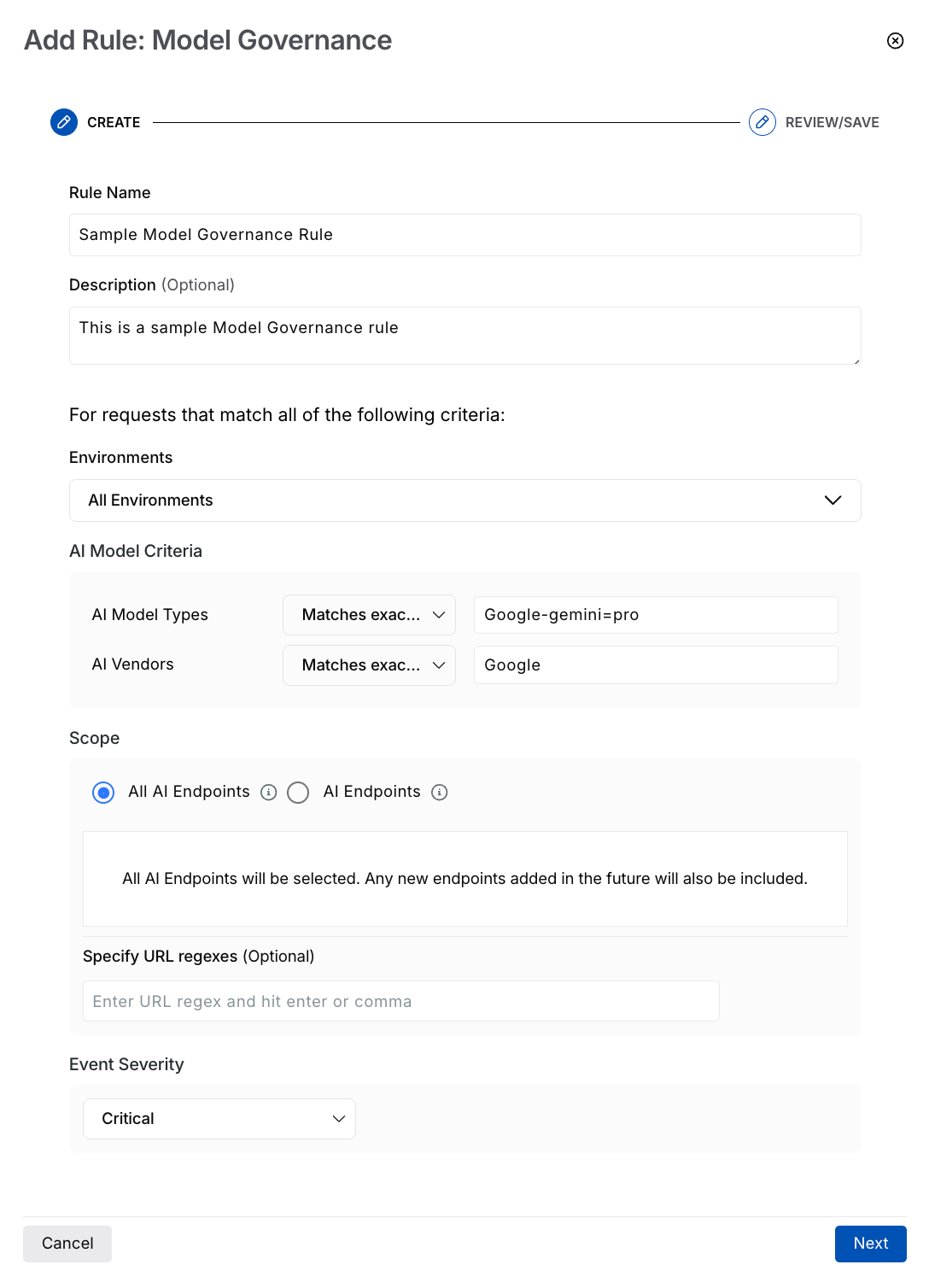

Model Governance Rule

Select the Environment(s) where you wish the rule to apply.

Select the AI Model Criteria based on which Traceable should apply the rule:

AI Model Types — The AI models based on their purpose or functionality.

AI Vendors — The provider or platform behind each AI model or service.

Traceable provides the following operators that you can select from, for the above criteria:

Any values — Includes all available values in the rule.

Matches exactly — Includes the value(s) you specify.

Does not match exactly — Includes all value(s) except the ones you specify.

Contains string — Includes the value(s) that contain the string you specify.

Does not contain string — Includes all value(s) except the ones containing the string you specify.

Matches pattern — Includes all value(s) matching the pattern you specify.

Note

Traceable performs an AND between the criteria above.

The values you specify for the above criteria are case-sensitive.

Select the Scope (All or specific AI endpoints) where you wish to apply the rule.

(Optional) Specify URL Regexes if you wish to include specific API endpoints.

Select the Event Severity you wish to apply to the identified event.

Click Next.

Review the details you specified above, and click Submit.
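The operators listed for the AI Model Criteria can be read as simple string predicates. The Python sketch below is one possible interpretation, assuming a single value per criterion and assuming that Matches pattern means a regular expression. The function names `matches` and `rule_applies` and the example values are hypothetical.

```python
import re


def matches(operator: str, actual: str, expected: str = "") -> bool:
    """Evaluate one criterion; all comparisons are case-sensitive."""
    if operator == "Any values":
        return True
    if operator == "Matches exactly":
        return actual == expected
    if operator == "Does not match exactly":
        return actual != expected
    if operator == "Contains string":
        return expected in actual
    if operator == "Does not contain string":
        return expected not in actual
    if operator == "Matches pattern":
        # Assumption: the pattern is a regular expression.
        return re.search(expected, actual) is not None
    raise ValueError(f"unknown operator: {operator}")


def rule_applies(
    model_type: str,
    vendor: str,
    type_criterion: tuple,
    vendor_criterion: tuple,
) -> bool:
    """Combine the AI Model Types and AI Vendors criteria with AND."""
    type_op, type_value = type_criterion
    vendor_op, vendor_value = vendor_criterion
    return matches(type_op, model_type, type_value) and matches(
        vendor_op, vendor, vendor_value
    )
```

Because comparisons are case-sensitive, a vendor criterion of `("Matches exactly", "OpenAI")` in this sketch would not match a vendor value of `openai`.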

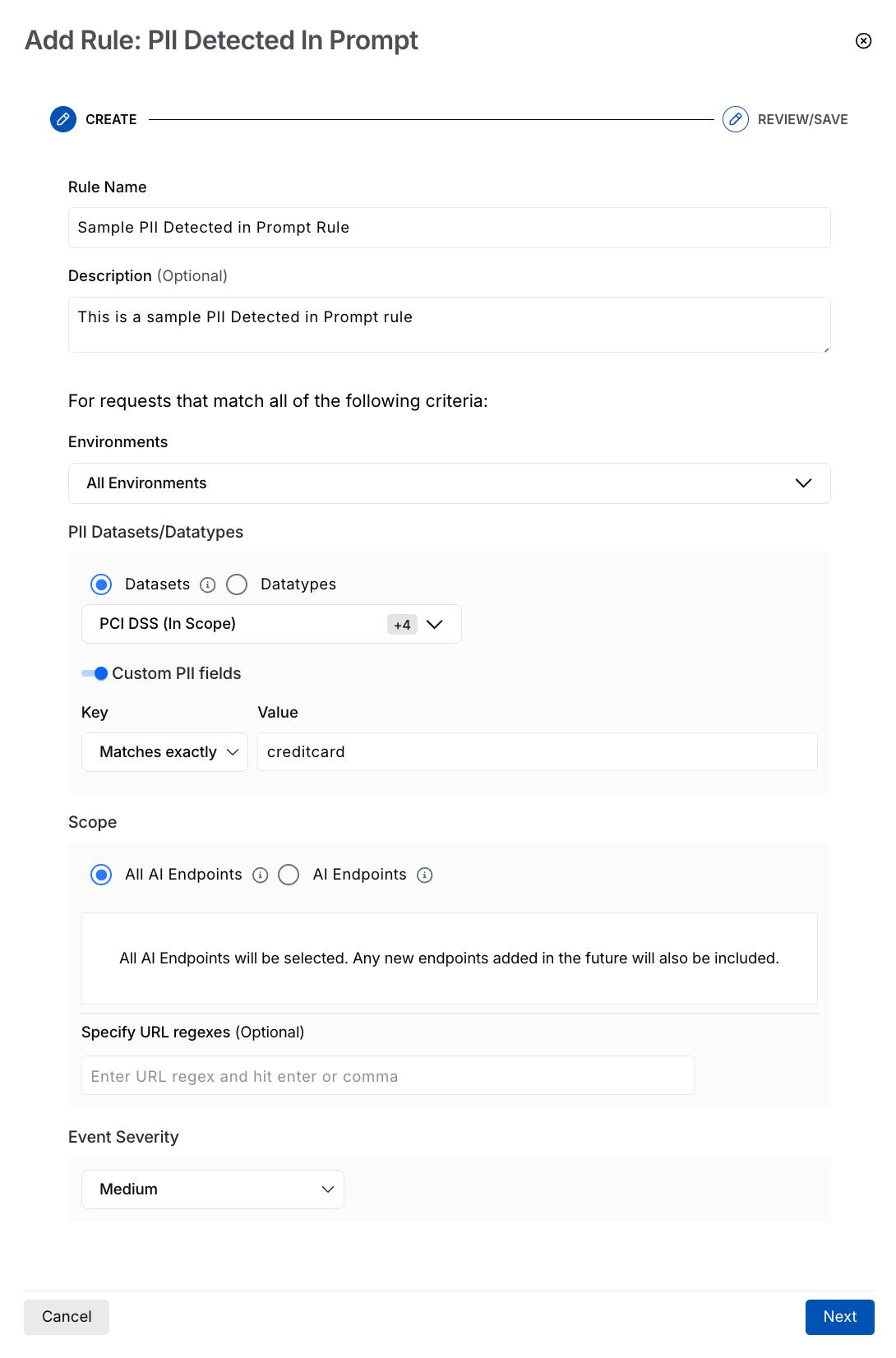

PII Detected in Prompt Rule

Select the Environment(s) where you wish the rule to apply.

Select the PII Datasets or Datatypes Traceable should check for, in the prompt.

(Optional) Enable the Custom PII fields toggle and specify any custom field key and value that Traceable should check for.

Select the Scope (All or specific AI endpoints) where you wish to apply the rule.

(Optional) Specify URL Regexes if you wish to include specific API endpoints.

Select the Event Severity you wish to apply to the identified event.

Click Next.

Review the details you specified above, and click Submit.
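To give a rough idea of what checking a prompt for selected data types involves, the following Python sketch scans a prompt with two regular expressions. It is a simplified illustration and not how Traceable detects PII. The names `PII_PATTERNS` and `find_pii` are hypothetical, and the patterns cover only basic email and phone formats.

```python
import re

# Illustrative patterns only; real PII detection covers far more data types.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def find_pii(prompt: str, datatypes: list[str]) -> dict[str, list[str]]:
    """Return the matches found in the prompt for each selected data type."""
    found = {}
    for name in datatypes:
        hits = PII_PATTERNS[name].findall(prompt)
        if hits:
            found[name] = hits
    return found
```

Only the data types passed in `datatypes` are checked, which mirrors selecting specific datasets or datatypes in the rule.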

AI policy management

Policy management in Traceable follows a hierarchical structure, so you can enable or disable policies for an entire environment or at a more granular level. You can manage components within the AI Application Protection policy tab at the following levels:


AI Policy Management

Environment Level — You can select the environment from the page’s top right corner and enable or disable the policy from the Status drop-down at the top of the tab. This enables or disables all threat types collectively on the selected environments.

Threat Type Level — You can use the Toggle next to the threat type to enable or disable all the threat rules under it.

Threat Rule Level — You can use the Action drop-down corresponding to a threat rule to enable (Monitor), disable (Disable), or mark it for testing (Mark for Testing).

While enabling the AI Application Protection policies at the environment level enables all threat types, you can also enable or disable the individual threat types or rules according to your requirements. Similarly, when you enable a threat type, you can manage the rules independently.
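One way to reason about the three levels is as a top-down check, sketched below in Python. The sketch assumes that a level that is turned off switches off everything beneath it, and that the rule's own action applies otherwise. The function name `effective_action` and this resolution order are assumptions for illustration, not documented Traceable behavior.

```python
def effective_action(
    environment_enabled: bool,
    threat_type_enabled: bool,
    rule_action: str,
) -> str:
    """Resolve what happens for one rule, checking the broadest level first."""
    if not environment_enabled:
        return "Disable"  # policy is off for the whole environment
    if not threat_type_enabled:
        return "Disable"  # threat type toggle is off
    return rule_action    # "Monitor", "Disable", or "Mark for Testing"
```

Under these assumptions, a rule set to Monitor takes effect only when both its environment and its threat type are enabled.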

Note

Any change at any of the above levels, in all environments or in specific environments, is called an override. For more information, see Understanding Overrides.

Actions on AI policies

Traceable allows you to carry out various actions on the threat rules available in the following threat types:

PII Detected in Prompt

Model Governance

AI Rate Limiting

AI Input Explosion

You can carry out the following actions on the pre-defined and custom threat rules:

View Rule — View the rule details without modifying them.

Edit Rule — Modify the details specified in the rule, for example, the datasets in a PII Detected in Prompt rule.

Reset Rule (pre-defined rules only) — Reset the rule to the default configuration specified by Traceable.

Delete Rule (custom rules only) — Delete the rule.

Note

Deleted rule(s) cannot be restored.